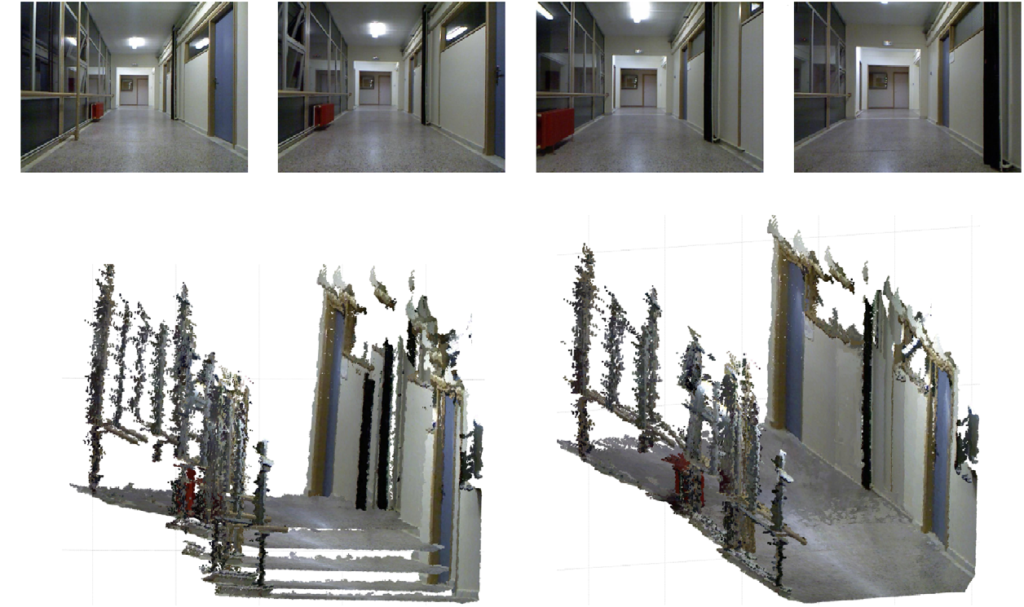

The simultaneous localization and mapping (SLAM) problem is that of estimating the robot’s position and progressively building a map of its environment. The difficulties of solving SLAM arise from the finite precision of the sensors and actuators of the robot, given real-life situations. For the low-layer navigation module, a SLAM algorithm with sensory input from an RGB-D camera is utilized. It is decomposed into five subordinate modules. The first step comprises the detection and matching of features in successive color images. By evaluating the depth images of these feature points, a set of point-wise 3D correspondences between the consequent frames is obtained. As a matter of fact, two successive dense point clouds can be formed from the respective depth maps of the RGB-D sensor. Right afterward, those 3D points are fed into a motion estimation routine, which nevertheless is not necessarily globally consistent, thereupon the result is refined in the fourth and fifth subordinate modules. In particular, two consecutive point clouds are first utilized and, by applying a simple RANSAC plane detection routine on both of them, only the subset of the 3D points that belong to the detected surfaces is retained. In the next step, these subsets of points are fed to an ICP algorithm to produce accurate refinements to the initial coarse motion estimation. The output of the algorithm at this stage is a globally consistent 3D model of the perceived environment, represented as a point cloud.