Self-localization is challenging for autonomous robots that move through unknown environments with no prior knowledge of the scene or of their own motion. Visual odometry addresses this challenge by estimating the full pose (orientation and position) of a camera mounted on the robot from a sequence of images.

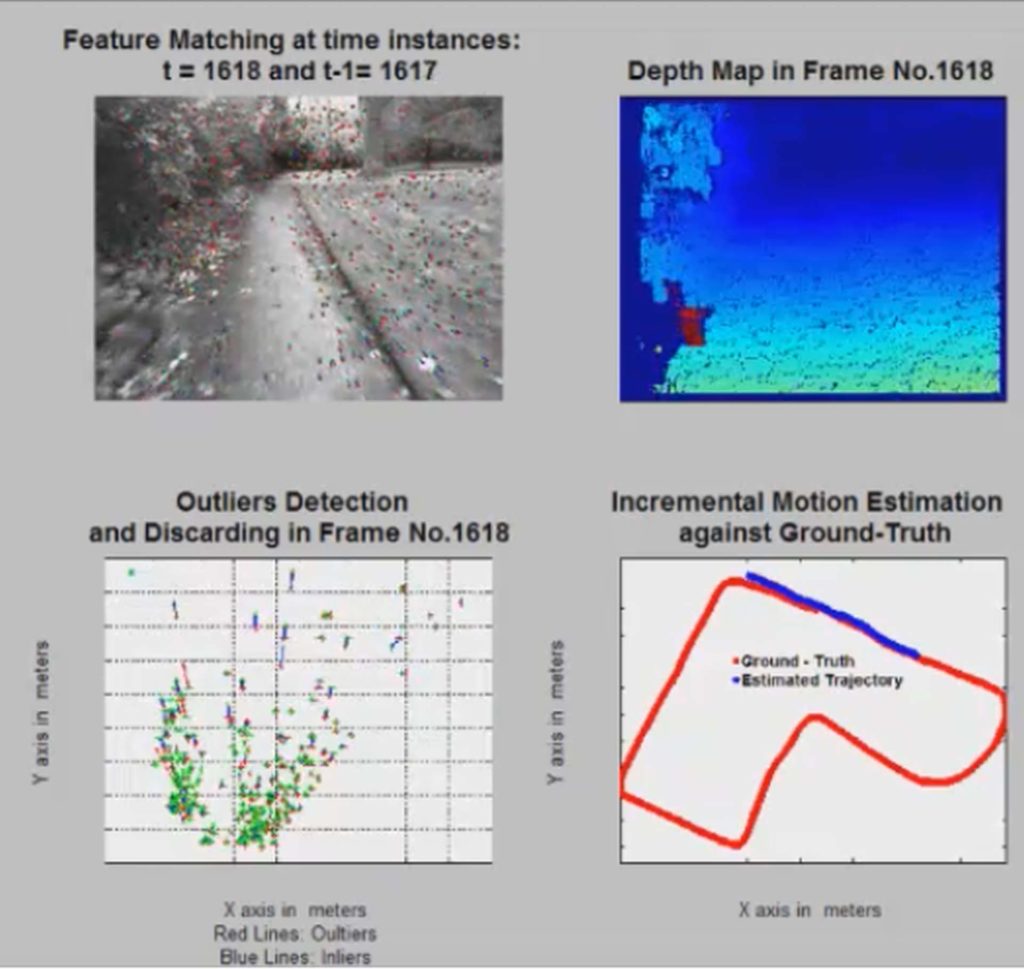

This work proposes a computationally efficient and accurate visual odometry algorithm that relies solely on stereo vision, without any additional inertial measurement unit or GPS sensor. The contribution of this visual odometry framework is twofold. First, it develops a non-iterative outlier detection technique that efficiently discards outliers among the features matched between two successive frames. Second, it introduces a hierarchical motion estimation approach in which the camera path is computed incrementally and, at each step, the global orientation and position of the robot are refined. The accuracy of the proposed system has been extensively evaluated against state-of-the-art visual odometry methods and DGPS benchmark routes. Experimental results are presented for harsh-terrain routes comprising 210 m of simulated data (Simulated dataset #1 and Simulated dataset #2) and 647 m of real outdoor data (the New College dataset), demonstrating a positioning accuracy of 1.1%.
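To make the idea of an incrementally computed camera path concrete, the sketch below chains frame-to-frame motion estimates into global poses using 4x4 homogeneous transforms. It is only an illustration of generic pose composition, not the hierarchical refinement scheme proposed in this work; the helper names `make_pose` and `accumulate_path`, the yaw-only rotation, and the synthetic square route are all assumptions made for the example.

```python
import numpy as np


def make_pose(yaw_rad, translation):
    """Build a 4x4 homogeneous transform from a yaw angle and a 3D translation.

    Hypothetical helper: a real system would estimate a full 3D rotation.
    """
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    T = np.eye(4)
    T[:3, :3] = np.array([[c, -s, 0.0],
                          [s, c, 0.0],
                          [0.0, 0.0, 1.0]])
    T[:3, 3] = translation
    return T


def accumulate_path(relative_motions):
    """Chain frame-to-frame motions into global poses, starting at the origin."""
    global_pose = np.eye(4)
    path = [global_pose.copy()]
    for T_rel in relative_motions:
        # Each relative motion is expressed in the previous camera frame,
        # so it is applied by right-multiplication.
        global_pose = global_pose @ T_rel
        path.append(global_pose.copy())
    return path


# Synthetic route (assumed for illustration): move 1 m forward, then turn
# 90 degrees left, repeated four times, which traces a closed square.
step = make_pose(np.pi / 2, [1.0, 0.0, 0.0])
path = accumulate_path([step] * 4)
final_position = path[-1][:3, 3]
```

With exact relative motions the path closes at the origin. In practice each frame-to-frame estimate carries a small error that compounds along the route, which is why rejecting outlier matches and refining the global pose at each step both matter.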